Kafka Logs & Metrics Monitoring

Actively monitor key metrics from your Kafka brokers, producers, consumers, and ZooKeeper ensemble to maintain performance and keep your Kafka cluster running smoothly.

Unified Kafka Broker Metric Dashboard

Monitor critical performance indicators across all brokers in real time from a single dashboard. Track throughput, latency, and storage utilization in one place and get a holistic view of how your Kafka infrastructure is performing.

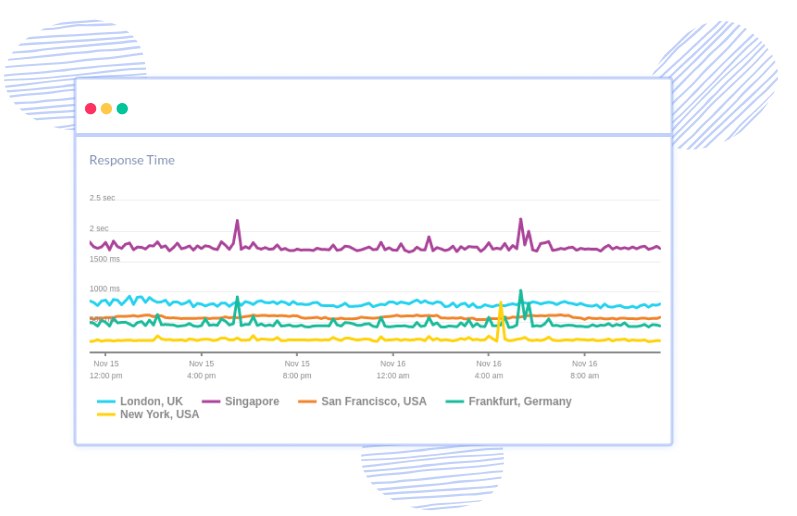
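As a rough illustration of what a consolidated broker view computes, the sketch below rolls per-broker samples up into cluster-level figures. It assumes the samples have already been collected (for example from the `BytesInPerSec` and `BytesOutPerSec` broker metrics Kafka exposes over JMX); the `BrokerSample` fields and the `cluster_summary` function are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class BrokerSample:
    """One per-broker metric sample (hypothetical field names)."""
    broker_id: int
    bytes_in_per_sec: float    # e.g. taken from the broker's BytesInPerSec metric
    bytes_out_per_sec: float   # e.g. taken from the broker's BytesOutPerSec metric
    produce_p99_ms: float      # 99th-percentile produce request latency on this broker
    disk_used_bytes: int
    disk_total_bytes: int


def cluster_summary(samples: list[BrokerSample]) -> dict:
    """Roll per-broker samples up into one cluster-wide view."""
    if not samples:
        raise ValueError("need at least one broker sample")
    used = sum(s.disk_used_bytes for s in samples)
    total = sum(s.disk_total_bytes for s in samples)
    fullest = max(samples, key=lambda s: s.disk_used_bytes / s.disk_total_bytes)
    return {
        # Each broker serves its own share of traffic, so throughput adds up.
        "bytes_in_per_sec": sum(s.bytes_in_per_sec for s in samples),
        "bytes_out_per_sec": sum(s.bytes_out_per_sec for s in samples),
        # Averaging per-broker p99 values does not yield a cluster p99,
        # so report the worst broker instead.
        "worst_produce_p99_ms": max(s.produce_p99_ms for s in samples),
        # Overall storage utilization, plus the broker whose disk is fullest.
        "disk_utilization": used / total,
        "fullest_broker": fullest.broker_id,
    }
```

Reporting the fullest broker alongside the cluster-wide ratio matters because a single broker can run out of disk while overall utilization still looks comfortable.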

Monitor Kafka Consumer Lag and Latency

Get precise insight into message processing delays and consumer responsiveness so data keeps flowing and performance stays consistent. Catch lagging consumers early, optimize resource utilization, and keep your Kafka clusters reliable.
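Consumer lag is commonly measured per partition as the log-end offset minus the offset the consumer group last committed, which is the same LAG column that `kafka-consumer-groups.sh --describe` reports. A minimal sketch, assuming both offset maps have already been fetched; `consumer_lag` and `total_lag` are hypothetical helpers, not part of any Kafka client API.

```python
from typing import Optional

# (topic, partition) pair used as a dictionary key.
TopicPartition = tuple[str, int]


def consumer_lag(log_end: dict[TopicPartition, int],
                 committed: dict[TopicPartition, int]) -> dict[TopicPartition, Optional[int]]:
    """Offset lag per partition: log-end offset minus the group's committed offset.

    Partitions the group has never committed to are reported as None (unknown)
    rather than guessed.
    """
    lag: dict[TopicPartition, Optional[int]] = {}
    for tp, end in log_end.items():
        if tp not in committed:
            lag[tp] = None
        else:
            # Clamp at zero: the two offsets are read at slightly different
            # moments, so a snapshot can briefly show committed > end.
            lag[tp] = max(end - committed[tp], 0)
    return lag


def total_lag(lag: dict[TopicPartition, Optional[int]]) -> int:
    """Sum of the known per-partition lags for the group."""
    return sum(v for v in lag.values() if v is not None)
```

For example, with log-end offsets of 120 and 80 and committed offsets of 100 and 80 on partitions 0 and 1, the group lags by 20 messages, all of them on partition 0.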

Real-time Kafka Log Events

Instant visibility into log data lets you quickly spot anomalies, troubleshoot errors, and keep your data pipelines running smoothly. Intervening early helps prevent downtime and improves efficiency and reliability across your entire system.

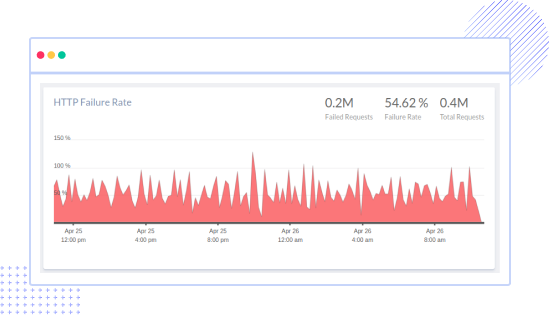
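A minimal sketch of filtering broker log lines down to actionable events. It assumes the `[timestamp] LEVEL message (logger)` layout that Kafka's sample log4j configuration typically produces; if your layout differs, the `LOG_LINE` pattern (a hypothetical name, like the helper functions below) must be adjusted.

```python
import re
from collections import Counter
from typing import Iterable, Optional

# Assumed layout: "[<timestamp>] <LEVEL> <message> (<logger>)"; the logger part is optional.
LOG_LINE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\s+(?P<level>[A-Z]+)\s+(?P<msg>.*?)"
    r"(?:\s+\((?P<logger>[\w.$]+)\))?$"
)


def parse_line(line: str) -> Optional[dict]:
    """Split one log line into ts/level/msg/logger, or return None if it doesn't match."""
    m = LOG_LINE.match(line.rstrip("\n"))
    return m.groupdict() if m else None


def alert_events(lines: Iterable[str],
                 levels: frozenset = frozenset({"WARN", "ERROR", "FATAL"})) -> list[dict]:
    """Keep only parsed events at the given severity levels.

    Continuation lines (e.g. stack-trace frames) don't match the pattern and are skipped.
    """
    events = []
    for line in lines:
        event = parse_line(line)
        if event is not None and event["level"] in levels:
            events.append(event)
    return events


def count_by_level(lines: Iterable[str]) -> Counter:
    """Count parsed events per log level, e.g. to chart error rates over time."""
    return Counter(e["level"] for e in map(parse_line, lines) if e is not None)
```

Counting events per level over fixed time windows is one simple way to notice a sudden burst of errors before it turns into an outage.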

Anticipate Malfunctions and Prevent Downtime

Gain real-time insight into Kafka's operational health to proactively detect misconfigurations, logical errors, and scalability issues. Identify overly restrictive timeout parameters or misconfigured request rate quotas before they cause service disruptions.
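Some timeout misconfigurations can be caught with simple consistency checks before they surface in production. The sketch below encodes two documented Kafka guidelines: a producer's `delivery.timeout.ms` should be at least `linger.ms` plus `request.timeout.ms`, and a consumer's `heartbeat.interval.ms` must be lower than `session.timeout.ms` and typically no higher than a third of it. The function names are hypothetical, and the checks expect the effective values in use, since defaults vary between Kafka versions.

```python
def check_producer_timeouts(cfg: dict[str, int]) -> list[str]:
    """Return human-readable problems found in a producer's timeout settings."""
    problems = []
    delivery = cfg["delivery.timeout.ms"]
    linger = cfg["linger.ms"]
    request = cfg["request.timeout.ms"]
    # A record must be able to wait in the batch (linger) and then complete
    # one request attempt within the overall delivery timeout.
    if delivery < linger + request:
        problems.append(
            f"delivery.timeout.ms ({delivery}) is less than "
            f"linger.ms + request.timeout.ms ({linger + request})"
        )
    return problems


def check_consumer_timeouts(cfg: dict[str, int]) -> list[str]:
    """Return human-readable problems found in a consumer's heartbeat settings."""
    problems = []
    session = cfg["session.timeout.ms"]
    heartbeat = cfg["heartbeat.interval.ms"]
    if heartbeat >= session:
        # Hard rule: the heartbeat must fire before the session expires.
        problems.append("heartbeat.interval.ms must be lower than session.timeout.ms")
    elif heartbeat * 3 > session:
        # Guideline: leave room for a few missed heartbeats before eviction.
        problems.append(
            "heartbeat.interval.ms is usually kept at or below 1/3 of session.timeout.ms"
        )
    return problems
```

Returning a list of messages rather than raising keeps the checks easy to run across many client configurations and report in one pass.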

+1-760-465-2330